Week 08: In-Class Assignment:

Neural Networks with sklearn

✅ Put your name here.

✅ Put your group member names here.

This ICA is due at 11:59PM today.

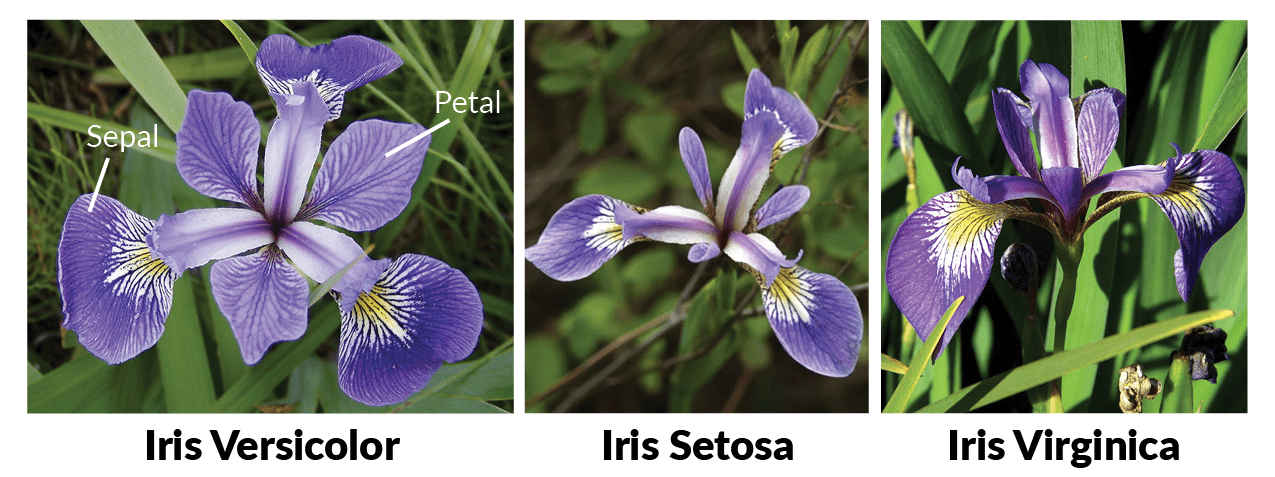

The goal of this ICA is to learn how to determine and quantify the impact of some of the stages of a machine learning project. In particular, we want to understand the impact of splitting, scaling, and transforming the data. To do this, you will use a dataset that you are already familiar with: the good old iris dataset.

It is important that your group break into subgroups to carry out parts in parallel. For example:

one person will use the data exactly as in this notebook,

one will comment out a few lines and

other group members will make a small transformation on the data.

The modifications are all straightforward, but you probably won’t have time to do everything sequentially; have some group members jump ahead so that they are ready.

For example, today you will explore whether scaling the features matters: it would be easiest if a subgroup of your group simply copied this notebook and removed the code that does the scaling.

The machine learning techniques that you will use are the perceptron and its multilayer version, the multilayer perceptron (MLP), which is a first step toward deep learning. Both are artificial neural networks (ANNs), and you will use sklearn’s implementations of them. No need to write your own SGD code! (Un)fortunately, this doesn’t leave much for you to do!

Having nice sklearn libraries means that you can focus on some new ideas that are extremely important: how you use ANNs in real applications. There are many tricky issues that come with using ANNs, and you will learn to navigate them today. These ideas are:

the train-test split process (overfitting is a real possibility),

rescaling the data (scaling is very important for ANNs), and

obtaining a quantitative metric for accuracy.

The goal of this mini machine learning project is to classify the three species of iris.

Before we get started, let’s pull in some libraries. Take a look at what is here: there are some sklearn tools we have not used yet.

import matplotlib.pyplot as plt
import numpy as np
import seaborn as sns
import pandas as pd
from sklearn import datasets
from sklearn.preprocessing import StandardScaler
from sklearn.linear_model import Perceptron
from sklearn.model_selection import train_test_split
from sklearn.metrics import accuracy_score

Part 1. Exploratory Data Analysis

Great. Now, let’s bring in the data. Since we want to focus on real-world issues with using ANNs, let’s use a dataset that we are already somewhat familiar with: iris. Before moving on, recall the properties of this dataset: how many features? How many labels? What type of ML problem is this?

# grab the nice dataset from sklearn
iris = datasets.load_iris()

# separate the features and the labels
X = iris.data
y = iris.target

✅ Task: This is a good time for you and your group to pause and think about what you would do in the real world. Do you know what the shapes of \(X\) and \(y\) are? Are you sure how to plug them into sklearn? Stop now and discuss this, and perhaps make a few visualizations (e.g., seaborn pairplot).

Are the features all of roughly the same magnitude?

Do they have the same variance?

Is the data in a random order, or ordered in some way?

Recall that we have already done some EDA on iris during the Statistics week.

Print out some descriptive statistics

What information do boxplots give you, and how do you plan on using it?

# Put your code here
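One possible starting point for the EDA questions is sketched below. It is a minimal sketch, not the expected answer. It prints the array shapes, wraps the features in a pandas DataFrame for descriptive statistics and per-feature variances, and checks whether the rows are ordered by counting how often the label changes from one row to the next. The "species" column name is our own choice. A seaborn pairplot (e.g., sns.pairplot(df, hue="species")) is a natural visual follow-up.

```python
import numpy as np
import pandas as pd
from sklearn import datasets

iris = datasets.load_iris()
X = iris.data
y = iris.target

# Shapes: X is (n_samples, n_features), y is (n_samples,)
print("X shape:", X.shape)
print("y shape:", y.shape)

# Wrap the features in a DataFrame so pandas can summarize them
df = pd.DataFrame(X, columns=iris.feature_names)
df["species"] = pd.Categorical.from_codes(y, iris.target_names)

# Count, mean, std, min/max and quartiles of each numeric feature
print(df.describe())

# Per-feature variance: are the features on similar scales?
print(df[iris.feature_names].var())

# Is the data ordered? Count how often the label changes between consecutive rows
n_label_changes = int(np.count_nonzero(np.diff(y)))
print("label changes along the rows:", n_label_changes)
```

A very small number of label changes means the samples are sorted by class. This matters for how you split the data.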

Part 2. Test-Train Split

In all of the ML you will ever do, you will need to check whether you have trained well and whether you have overfit your data. The basic idea is to separate the data into two pieces: one that you train with and one that you test that training on.

As you already know, this is so important that sklearn makes it easy for you. Take a few moments to read the documentation on model_selection, and train_test_split in particular, to understand what they do for you, how you use them, and what the various options are.

✅ Task: Split your data into training and testing sets. Are you going to use the simple train_test_split, or do you need to use StratifiedShuffleSplit?

# Put your code here
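Here is a minimal sketch of one way to do the split. It assumes a 70/30 split and a fixed random_state=42, both arbitrary illustrative choices. Passing stratify=y to train_test_split keeps the class proportions the same in both pieces, which does the same job as StratifiedShuffleSplit in a single call. Shuffling is on by default, and that matters here because iris is sorted by class.

```python
import numpy as np
from sklearn import datasets
from sklearn.model_selection import train_test_split

iris = datasets.load_iris()
X, y = iris.data, iris.target

# Hold out 30% for testing; stratify=y keeps the three classes balanced in both pieces
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=42
)

print(X_train.shape, X_test.shape)
print("train class counts:", np.bincount(y_train))
print("test class counts: ", np.bincount(y_test))
```

Try removing stratify=y and rerunning with a few different random_state values. The class counts in the test set will then drift away from equal.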

Part 3. Scaling the Data

Next, we are going to prepare the data. In general, in data science this can be a long and arduous process, as you will likely learn in your projects: bad data, features that need to be transformed, and so on. Here, we want to focus on a related issue that is closer to ML itself: the relative magnitudes of the feature values.

Consider, for example, an ML algorithm that uses the covariance. The size of the result (e.g., the variance of a particular feature) depends on the magnitude of the values in the data, as it should. But suppose that, before you got the data, someone scaled one of the features by a factor of a million because they wanted to measure, say, distances in microns rather than meters. Ideally, your ML approach would be immune to what units the data are in! As it turns out, many ML approaches are extremely sensitive to the magnitude of the data, so, once again, sklearn has a library built in to help you.

✅ Task: Set up a StandardScaler and fit it to your training set.
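The unit argument above can be checked numerically. The sketch below multiplies one iris feature by a million, an arbitrary stand-in for a change of units. The variance of that feature then grows by a factor of (10^6)^2 = 10^12, while the standardized versions of the two datasets come out essentially identical.

```python
import numpy as np
from sklearn import datasets
from sklearn.preprocessing import StandardScaler

X = datasets.load_iris().data

# Pretend someone recorded feature 0 in units a million times smaller
X_rescaled = X.copy()
X_rescaled[:, 0] *= 1e6

# The covariance matrix depends on the units: var of feature 0 grows by 1e12
cov_orig = np.cov(X, rowvar=False)
cov_resc = np.cov(X_rescaled, rowvar=False)
print("variance ratio for feature 0:", cov_resc[0, 0] / cov_orig[0, 0])

# After standardizing (zero mean, unit variance per feature) the units no longer matter
Z_orig = StandardScaler().fit_transform(X)
Z_resc = StandardScaler().fit_transform(X_rescaled)
print("max difference after scaling:", np.abs(Z_orig - Z_resc).max())
```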

# Put your code here
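A minimal sketch of the fitting step, assuming the same illustrative 70/30 stratified split with random_state=42. The key point is that fit sees only X_train. The scaler stores one mean (mean_) and one standard deviation (scale_) per feature, computed from the training data.

```python
import numpy as np
from sklearn import datasets
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

iris = datasets.load_iris()
X_train, X_test, y_train, y_test = train_test_split(
    iris.data, iris.target, test_size=0.3, stratify=iris.target, random_state=42
)

# Fit ONLY on the training features: the scaler learns a mean and a std per feature
scaler = StandardScaler()
scaler.fit(X_train)

print("per-feature means learned:   ", np.round(scaler.mean_, 3))
print("per-feature std devs learned:", np.round(scaler.scale_, 3))
```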

✅ Task: With the scaler defined, we can now apply it to the data itself. Print out some of the statistics of the newly transformed data and compare them with those of the original data.

# Put your code here
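One way to apply the fitted scaler and compare statistics is sketched below, again assuming the illustrative split used earlier. The same fitted scaler transforms both pieces. Only the training set is guaranteed to end up with mean 0 and standard deviation 1, because the scaler's parameters came from it.

```python
import numpy as np
from sklearn import datasets
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

iris = datasets.load_iris()
X_train, X_test, y_train, y_test = train_test_split(
    iris.data, iris.target, test_size=0.3, stratify=iris.target, random_state=42
)
scaler = StandardScaler().fit(X_train)

# Apply the SAME fitted scaler to both pieces
X_train_std = scaler.transform(X_train)
X_test_std = scaler.transform(X_test)

for name, A in [("train raw", X_train), ("train scaled", X_train_std),
                ("test raw", X_test), ("test scaled", X_test_std)]:
    print(f"{name:>12}  mean={np.round(A.mean(axis=0), 3)}  std={np.round(A.std(axis=0), 3)}")
```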

✅ Question: What do you notice? What is the mean before and after? Is it zero? Why or why not? How about the standard deviation and the variance?

✎ Put your conclusions here!

✅ Task: Make plots showing the distribution before and after scaling. Show visually what has been done. One way to do this is to slice two of the features so that you can make a 2D plot. (Recall that the iris data is 4D.)

# Put your code here
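A sketch of a before/after plot, assuming the same illustrative split. It slices out petal length and petal width (columns 2 and 3, an arbitrary pair) and draws the training points side by side, colored by class. Look at the axis ranges: after scaling, the cloud is centered near the origin and has a similar spread along both axes.

```python
import matplotlib.pyplot as plt
from sklearn import datasets
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

iris = datasets.load_iris()
X_train, X_test, y_train, y_test = train_test_split(
    iris.data, iris.target, test_size=0.3, stratify=iris.target, random_state=42
)
X_train_std = StandardScaler().fit(X_train).transform(X_train)

# Slice out two of the four features so the data fit on a 2D plot
i, j = 2, 3  # petal length (cm), petal width (cm)
fig, axes = plt.subplots(1, 2, figsize=(10, 4))
for ax, data, title in [(axes[0], X_train, "before scaling"),
                        (axes[1], X_train_std, "after StandardScaler")]:
    ax.scatter(data[:, i], data[:, j], c=y_train, cmap="viridis", s=15)
    ax.set_xlabel(iris.feature_names[i])
    ax.set_ylabel(iris.feature_names[j])
    ax.set_title(title)
    # Reference lines through zero make the centering easy to see
    ax.axhline(0, color="gray", lw=0.5)
    ax.axvline(0, color="gray", lw=0.5)
fig.tight_layout()
plt.show()
```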

✅ Question: Discuss with your group members the choice of using the same scaler on both the training and testing data, even though you fit the scaler with only the training data. Does that make sense to you? Why or why not?

✎ Put your conclusions here!

Now, pause for a moment and think about what you just did. All of the steps you took above have nothing to do with ANNs: these steps are potentially extremely important for any ML application.

Have one person in your group copy the code above and simply remove all of the scaling steps. (Hint: you can comment out the scaling steps using #, remove those cells, or make new arrays.) You will run both versions of the data through the perceptron to see the difference scaling makes.

Another group member copies the code exactly as it is and scales one of the features by a very large or very small number, perhaps mimicking one of the lengths being measured in another unit system. (Hint: use NumPy to multiply one column by a factor. What does A[:,1] *= 5.2 do?)
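The hint about A[:,1] *= 5.2 can be tried directly. In the sketch below, a factor of 10 (as if sepal width, column 1, had been recorded in millimetres instead of centimetres) is an illustrative choice. Column slicing with an in-place multiply changes every row of that one column and leaves the others untouched. The .copy() is important: without it, X_worse would be another name for the same array, and X would be modified too.

```python
import numpy as np
from sklearn import datasets

X = datasets.load_iris().data

# Version 1: the raw data, no scaling at all
X_raw = X.copy()

# Version 2: a "worse" form. Pretend column 1 (sepal width) was recorded in mm, not cm.
# A[:, 1] *= factor multiplies every row of column 1 in place.
X_worse = X.copy()
X_worse[:, 1] *= 10.0

print("column means, raw:  ", np.round(X_raw.mean(axis=0), 3))
print("column means, worse:", np.round(X_worse.mean(axis=0), 3))
```

The third, scaled version comes from StandardScaler, fitted on the training split as in Part 3.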

To be clear, you are comparing three versions of the data:

the raw data,

the data in a “worse form” that you might have started with, and

the data in a better form that has been scaled using sklearn.

Part 4. Machine Learning

Perceptron

Finally, let’s do some ML! As with most models in sklearn, the entire learning step takes just two lines.

✅ Task: Instantiate a Perceptron object and then call its fit method on the data.

# Put your code here
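A minimal sketch of the "two lines" (instantiate, then fit), assuming the illustrative stratified split and scaled training data from Part 3. The values eta0=0.1 and random_state=1 are arbitrary choices for illustration, not recommended settings. For three classes, sklearn's Perceptron trains one-vs-rest, so coef_ holds one weight vector per class.

```python
from sklearn import datasets
from sklearn.linear_model import Perceptron
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

iris = datasets.load_iris()
X_train, X_test, y_train, y_test = train_test_split(
    iris.data, iris.target, test_size=0.3, stratify=iris.target, random_state=42
)
scaler = StandardScaler().fit(X_train)
X_train_std = scaler.transform(X_train)

# The "two lines": build the model, then fit it to the scaled training data
ppn = Perceptron(eta0=0.1, max_iter=1000, random_state=1)
ppn.fit(X_train_std, y_train)

print(ppn)              # recent sklearn versions show only non-default parameters
print(ppn.coef_.shape)  # one weight vector per class (one-vs-rest)
```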

✅ Task: Using the web, discuss in detail what the inputs to Perceptron are: eta0, random_state, and so on. What options are available, and what are the typical defaults? Note that displaying the Perceptron object shows the parameters it used (recent sklearn versions list only those that differ from the defaults). Discuss each of those options with your group. (Some of these options we have yet to discuss in class.)

✎ Put your conclusions here!
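To see every option at once, including the defaults that the printed object hides, you can ask an untouched Perceptron for its parameters. get_params() is the standard sklearn estimator method for this. The sketch just lists the name and value of each constructor argument.

```python
from sklearn.linear_model import Perceptron

# get_params() returns every constructor argument and its current value;
# on an unmodified Perceptron() these are the library defaults
defaults = Perceptron().get_params()
for name in sorted(defaults):
    print(f"{name:>20} = {defaults[name]!r}")
```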

Part 5. Analyzing the Output

You may have noticed that the fit method didn’t output anything interesting. This is pretty unsatisfying: it did its job, but we are left with nothing to look at!

Now the fun starts! Let’s take a look at what it did!

The most obvious next step is to see if our trained perceptron can make good predictions - did it learn anything?!

Luckily, we set some data aside that we can work with.

✅ Task: Run your Perceptron on the test dataset and check how accurate it is.

# Put your code here
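A sketch of the evaluation step, under the same illustrative split and Perceptron settings as before. The test features go through the scaler that was fitted on the training set, then through predict. accuracy_score compares the predictions with the true labels. The estimator's own score method computes the same accuracy.

```python
from sklearn import datasets
from sklearn.linear_model import Perceptron
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

iris = datasets.load_iris()
X_train, X_test, y_train, y_test = train_test_split(
    iris.data, iris.target, test_size=0.3, stratify=iris.target, random_state=42
)
scaler = StandardScaler().fit(X_train)
X_train_std, X_test_std = scaler.transform(X_train), scaler.transform(X_test)

ppn = Perceptron(eta0=0.1, random_state=1).fit(X_train_std, y_train)

# Predict on data the model never saw, then compare with the true labels
y_pred = ppn.predict(X_test_std)
acc = accuracy_score(y_test, y_pred)
print("misclassified test samples:", (y_test != y_pred).sum())
print("accuracy:", acc)
print("same thing via score():", ppn.score(X_test_std, y_test))
```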

Part 6. Play

Now, it is time for you to play.

First: Run the other two versions of the data (unscaled, and with one column multiplied by a factor) through the perceptron to see what scaling the data does. Did scaling change the accuracy? Open a markdown cell and discuss your observations.

Next: vary many of the other choices, including turning this into an MLP (see the code below).
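A sketch of a side-by-side comparison of the three versions of the data. The factor of 1000 on column 1, the split, and the Perceptron settings are all illustrative assumptions. With the same random_state and stratify labels, train_test_split picks the same rows for every version, so only the data treatment differs. No particular accuracies are claimed here; run it and see what you get.

```python
from sklearn import datasets
from sklearn.linear_model import Perceptron
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

iris = datasets.load_iris()
X_worse = iris.data.copy()
X_worse[:, 1] *= 1000.0  # hypothetical "bad units" factor for column 1

results = {}
for name, X in [("raw", iris.data), ("one column x1000", X_worse),
                ("StandardScaler", iris.data)]:
    X_train, X_test, y_train, y_test = train_test_split(
        X, iris.target, test_size=0.3, stratify=iris.target, random_state=42)
    if name == "StandardScaler":
        # Fit on the training rows only, then transform both pieces
        scaler = StandardScaler().fit(X_train)
        X_train, X_test = scaler.transform(X_train), scaler.transform(X_test)
    ppn = Perceptron(eta0=0.1, random_state=1).fit(X_train, y_train)
    results[name] = accuracy_score(y_test, ppn.predict(X_test))
    print(f"{name:>18}: test accuracy = {results[name]:.3f}")
```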

Vary as many things as you can and comment on all of the discoveries you make. For example,

what if you change how much (percentage) you split the data?

what if you change the number of iterations?

what are the penalties? what impact do they have?

and so on (see below).
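One way to vary several choices at once is a small grid loop, sketched below. The particular test sizes, iteration counts, and alpha=1e-4 are arbitrary illustrative values. The penalty options (None, "l2", "l1", "elasticnet") are the ones Perceptron accepts. Very small max_iter values will usually not converge, so ConvergenceWarning is silenced to keep the output readable.

```python
import warnings
from sklearn import datasets
from sklearn.exceptions import ConvergenceWarning
from sklearn.linear_model import Perceptron
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

warnings.filterwarnings("ignore", category=ConvergenceWarning)
iris = datasets.load_iris()

rows = []
for test_size in (0.2, 0.3, 0.5):
    X_train, X_test, y_train, y_test = train_test_split(
        iris.data, iris.target, test_size=test_size, stratify=iris.target, random_state=42)
    scaler = StandardScaler().fit(X_train)
    X_train, X_test = scaler.transform(X_train), scaler.transform(X_test)
    for max_iter in (5, 50, 1000):
        for penalty in (None, "l2", "l1", "elasticnet"):
            ppn = Perceptron(penalty=penalty, alpha=1e-4, max_iter=max_iter, random_state=1)
            ppn.fit(X_train, y_train)
            rows.append((test_size, max_iter, penalty, ppn.score(X_test, y_test)))

for test_size, max_iter, penalty, acc in rows:
    print(f"test_size={test_size:<4} max_iter={max_iter:<5} penalty={str(penalty):<10} acc={acc:.3f}")
```

A single split can be noisy on a dataset this small. Rerunning with a few different random_state values shows how much of any difference is just luck.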

In another markdown cell, write down what seems to work really well. (And, you can use the code below if it helps!)

Part 7. Multilayer Perceptrons

In the time that remains, you will explore the MLP.

✅ Task: Rerun your code using an MLP classifier this time.

# Put your code here
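sklearn's multilayer perceptron lives in sklearn.neural_network as MLPClassifier. The sketch below trains it next to the single-layer Perceptron on the same scaled split. One hidden layer of 10 ReLU units, solver="adam", and max_iter=2000 are illustrative assumptions, not tuned values. The coefs_ attribute lists one weight matrix per layer: input to hidden, then hidden to output.

```python
from sklearn import datasets
from sklearn.linear_model import Perceptron
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPClassifier
from sklearn.preprocessing import StandardScaler

iris = datasets.load_iris()
X_train, X_test, y_train, y_test = train_test_split(
    iris.data, iris.target, test_size=0.3, stratify=iris.target, random_state=42)
scaler = StandardScaler().fit(X_train)
X_train_std, X_test_std = scaler.transform(X_train), scaler.transform(X_test)

# Single-layer perceptron as a baseline
ppn = Perceptron(eta0=0.1, random_state=1).fit(X_train_std, y_train)

# MLP with one hidden layer of 10 units (an arbitrary illustrative size)
mlp = MLPClassifier(hidden_layer_sizes=(10,), activation="relu", solver="adam",
                    max_iter=2000, random_state=1)
mlp.fit(X_train_std, y_train)

print("Perceptron test accuracy:", ppn.score(X_test_std, y_test))
print("MLP test accuracy:       ", mlp.score(X_test_std, y_test))
print("MLP weight shapes:", [w.shape for w in mlp.coefs_])
```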

✅ Question: What do you notice? Which classifier is better? Why or why not? How can you improve the worse classifier? Again, vary the inputs (scaled or not) and the MLP options sklearn gives you. Summarize what you find in a new, final markdown cell.

✎ Put your conclusions here!
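To vary both the inputs and the MLP options systematically, a loop over raw versus scaled data, a few layer layouts, and two values of the L2 penalty alpha is one possible sketch. All of the specific values (hidden sizes, alpha, max_iter=500) are illustrative assumptions. ConvergenceWarning is silenced because some combinations, particularly on raw data, may stop before converging.

```python
import warnings
from sklearn import datasets
from sklearn.exceptions import ConvergenceWarning
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPClassifier
from sklearn.preprocessing import StandardScaler

warnings.filterwarnings("ignore", category=ConvergenceWarning)

iris = datasets.load_iris()
X_train, X_test, y_train, y_test = train_test_split(
    iris.data, iris.target, test_size=0.3, stratify=iris.target, random_state=42)
scaler = StandardScaler().fit(X_train)
inputs = {
    "raw": (X_train, X_test),
    "scaled": (scaler.transform(X_train), scaler.transform(X_test)),
}

scores = {}
for input_name, (Xtr, Xte) in inputs.items():
    for hidden in [(5,), (20,), (20, 20)]:
        for alpha in (1e-4, 1e-1):
            mlp = MLPClassifier(hidden_layer_sizes=hidden, alpha=alpha,
                                max_iter=500, random_state=1).fit(Xtr, y_train)
            scores[(input_name, hidden, alpha)] = mlp.score(Xte, y_test)

for key, acc in scores.items():
    print(key, f"{acc:.3f}")
```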

© Copyright 2023, Department of Computational Mathematics, Science and Engineering at Michigan State University.