Homework 3: Loading, Cleaning, Analyzing, and Visualizing Data with Pandas (and using resources!)#

✅ Put your name here

#Learning Goals#

Using pandas to work with data and clean it

Make meaningful visual representations of the data

Fitting curves to data and evaluating model fits

Rubric#

Part 0 (2 points)

Part 1 (22 points)

Part 2 (20 points)

Part 3 (16 points)

Total: 60 points

Assignment instructions#

Work through the following assignment, making sure to follow all of the directions and answer all of the questions.

This assignment is due at 11:59pm on Friday, November 1, 2024

It should be uploaded into D2L Homework #3. Submission instructions can be found at the end of the notebook.

Part 0. Academic integrity statement (2 points)#

In the markdown cell below, paste your personal academic integrity statement. By including this statement, you are confirming that you are submitting this as your own work and not that of someone else.

✎ Put your personal academic integrity statement here.

Before we read in the data and begin working with it, let’s import the libraries that we would typically use for this task. You can always come back to this cell and import additional libraries that you need.

import pandas as pd

import matplotlib.pyplot as plt

import numpy as np

from scipy.optimize import curve_fit

Part 1: Reading, describing, and cleaning data (22 total points)#

While researching polution and air quality, you find a dataset compiled by the World Health Organization\(^{1}\). You want to explore the dataset to see what insight and conclusions you can gain from this data.

\(^{1}\)WHO. WHO Ambient Air Quality Database (update 2024). Version 6.1. Geneva, World Health Organization, 2024

1.1 Read the data (2 point)#

✅ Task#

Read in the data from who_ambient_air_quality.csv into a Pandas dataframe (1 pt) and display the head and tail of the data (1 pt).

NOTE: There are 40098 rows of data. If you get a lot of NaN rows at the end of the dataframe, use the nrows argument in read_csv to control that.

## your code here

That’s a lot of data! Let’s break this down into something more manageable and meaningful.

1.2 Describe the data (5 points)#

The columns in this dataset represent the following:

who_region: The region where the data was collectediso3: Shorthand for the country where the data was collectedcountry_name: The country where the data was collectedcity: The city where the data was collectedyear: The year the data was collectedversion: The version of the study in which the data appearedpm10_concentration: Concentration of particulate matter below 10 micrometers (PM\(_{10}\))pm25_concentration: Concentration of particulate matter below 2.5 micrometers (PM\(_{2.5}\))no2_concentration: Concentration of nitrogen dioxide (NO\(_{2}\))pm10_tempcov: Percentage the year that measurements were obtained, when available, for PM\(_{10}\)pm25_tempcov: Percentage the year that measurements were obtained, when available, for PM\(_{2.5}\)no2_tempcov: Percentage the year that measurements were obtained, when available, for NO\(_{2}\)type_of_stations: Type of station that obtained the measurementreference: Source of the data, if not directly measured by WHOweb_link: Link for where the data was obtained, if applicablepopulation: Population of the city where measurements were takenpopulation_source: Where the population data was obtained, if applicablelatitude: Latitude of the city where measurements were takenlongitude: Longitude of the city where measurements were takenwho_ms:

1.2.1 (1 point)#

✅ Task#

Use describe to display several summary statistics from the data frame. (Remember that this will only display statistics from numerical data. describe will ignore text entries.)

## your code here

1.2.2 (4 points)#

✅ Task#

Using the results from describe above, write in the markdown cell below:

The earliest and latest

yearrepresented in this study (1 pt)The smallest and largest values for

population(1 pt)The smallest and largest values for

pm10_tempcov. (1 pt) Using what you know about this column, does this make sense? (1 pt)

✎ Put your answer here:

1.3 Isolating and performing basic statistics on data (4 points)#

1.3.1 (1 point)#

✅ Task#

Display the pm10_concentration column on its own using the name of the column.

## your code here

1.3.2 Displaying Data (1 point)#

✅ Task#

Using .iloc, display the first five rows of just the pm10_concentration column.

## your code here

1.3.3 Descriptive Statistics (2 points)#

✅ Task#

Using mean and median functions, print out the mean and median pm25_concentration values for all the data.

## your code here

1.4 Understanding the data (4 points)#

✅ Task#

Go to the website for the data. You should see a brief description of the data.

State the limitations for the data that are listed (1 pt).

In your own words, describe why these limitations exist (3 pts).

✎ Put your answer here:

1.5 Filter the data using masking and dropna(5 points)#

1.5.1 Masking the Data (2 points)#

✅ Task#

Based on the limitations for the data, you know that you shouldn’t directly compare data between countries. You decide to start by looking at the data for just the United States.

Using an appropriate mask on the dataframe, make a new dataframe that only includes data from the United States (2 pts)

## your code here

1.5.2 Cleaning Data (3 points)#

✅ Task#

Suppose you want to model the relationship between PM\(_{10}\), PM\(_{2.5}\), and NO\(_{2}\). Therefore, you only want data that have results from pm10_concentration, pm25_concentration, and no2_concentration.

Drop the rows that do not have measurements in any of the following columns: pm10_concentration, pm25_concentration, and no2_concentration. If you are having trouble, you might find the dropna command and the subset argument helpful. (3 pts)

## your code here

1.6 Reflections (2 points)#

✅ Task#

Document your pathway to a solution for Question 1.5 (masking and dropping NaNs). (1 pt)

Describe how you know your code in Question 1.5 is working. (1 pt)

✎ Put your answer here:

Part 2: Exploratory Data Analysis (20 total points)#

It’s time to explore our data! Let’s visualize our data and look for correlations in our US air quality dataset from part \(1\) above.

Part 2.1: Correlations task (5 points)#

Suppose you want to study the relationship between the annual mean concentrations of the pollutants PM\(_{10}\), PM\(_{25}\), and NO\(_{2}\), as it relates to air quality in the United States. Our dataset contains columns with numerical values and some with string values. We can only look for correlations between columns that have numerical values.

✅ Task 1 (2 points):#

Print or display a correlation matrix using only the columns pm10_concentration, pm25_concentration , and no2_concentration. Hint1: Look up the pandas corr function for dataframes. Hint2: To select multiple columns, use the following notation: df[["col1", "col2"]]

# Put your code here

✅ Task 2 (3 points):#

For the correlations between each pair of columns, describe the direction of the correlation in plain words (3 sentences, one describing each pair) (3 pts, 1 pt each).

✎ Put your answer here

Part 2.2: Visual representation of correlations (15 points)#

The numbers above gives us a quantitative measure of the correlations in the dataset. As you can see, pm10_concentration, pm25_concentration, no2_concentration all have correlative relationships with each other, regardless if these relationships are strong or weak.

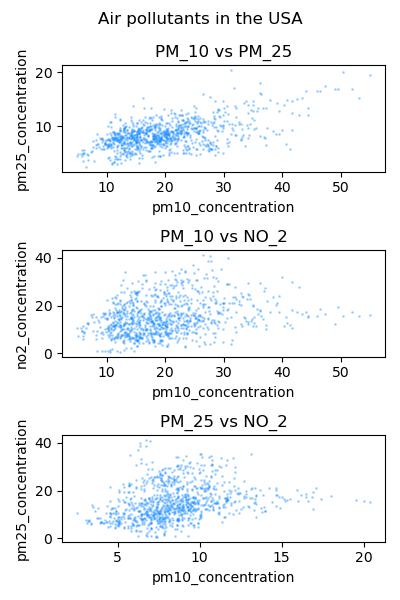

Visualization in data science is a very important skill!!! In the next exercise, you will visualize the relationships between these variables as scatterplots. You will create a plot for each relationship: 1) pm10_concentration versus pm25_concentration , 2) pm25_concentration versus no2_concentration, and 3) pm10_concentration versus no2_concentration.

We want to make a plot similar to the one below:

✅ Task 1 (11 points): Use matplotlib to make three scatter plots like the ones above. For full points, do the following:#

Create 3 subplots using

plt.subplot. Use 3 rows and 1 column (2 points).Use

plt.figureand the argumentfigsizeto make a plot 4 inches wide x 6 inches long (1 point).Plot 1)

pm10_concentrationvs.pm25_concentration, 2)pm25_concentrationvs.no2_concentration, and 3)pm25_concentrationvs.no2_concentration. Provide a title for each subplot (1 point).Give the overall plot the title

Air pollutants in the USA(1 point)Provide x-axis labels (1 point).

Provide y-axis labels (1 point).

Create variables for point size, color, and alpha outside of the code for your plots. Use the variables you created to control size, color, and alpha in your subplots. Use the same parameters for all the plots. Choose appropriate point size, color, and alpha so that you can see the relationships in your data (avoid overplotting) (3 points, one for each).

Use

tight_layoutto give your plot optimal sizing (1 points).

#put your code here

✅ Task 2 (4 points): Looking at the plots, answer the following questions#

Which plots looks like it has the strongest correlation? Justify your answer (1 point).

Which plot looks the most linear? (1 point).

Which plot do you believe would be the easiest to create a model for? Justify your answer (1 point).

Do you believe your graphs in part \(2.2\) correspond with your correlation figures from part \(2.1\)? Justify your answer. (1 points)

✎ Put your answer here

Part 1 (1pt):

Part 2 (1pt):

Part 3 (1pt):

Part 4 (1pt):

Part 3: The Ukulele Detective (15 points)#

You are listening to someone play ukulele and you want identify what note they are playing. Fortunately, you have your trusty data collector that can tell you the amplitude of the sound at a given time and you brought your computer to analyze the data with Python.

Your task is to take the sine wave model for notes,

\(y=A \mathrm{sin}(2\pi f t+\phi)\)

and fit it to your data to find the best fit parameters for \(A\), \(f\), and \(\phi\) using curve_fit and identify what frequency of note is being played using your best fit value for \(f\).

If you don’t know what those parameters are, do some googling/research to find out. If you still can’t figure them out, come to office hours or talk to your instructor.

3.1 Reading in Data and Plotting (2 points)#

✅ Task#

With this homework, you will find a data file called ukulele_data.csv. In the cell below, load in the data and plot it with time on the x-axis and amplitude on the y-axis.

# Put your code here!!

3.2 Setting up your model for fitting (2 points)#

✅ Task#

To use curve_fit, you need to define your model as a Python function. Create a Python function using the sine wave model from above.

# Put your code here!

3.3 Using curve_fit (5 points)#

✅ Task#

Now use curve_fit to identify your best fit parameters and plot the data and best fit together.

# put your code here!

# Initial Guess guidance

# A_guess = # A is the amplitude, so pick a value that is roughly the average of the amplitude

# f_guess = 400.0 Hz

# phi_guess = 0.0

3.4 Making Conclusions (2 points)#

✅ Task#

What note is the ukulele playing playing? Justify that this makes sense by doing some research about ukuleles!

✎ Put your answer here.

3.5 Documenting your solution pathway (5 points)#

✅ Task#

Describe in detail how you solved the problem including any external resources you consulted (e.g. generative AI prompts and outputs, past assignments, Code Portfolio, Stack Overflow, Google, etc.) and how they informed your approach. You must also describe how you know your solution works (e.g. and calculations you made, tests you ran, etc.)

If you did not use external resources, describe your thought process for solving the problem by answering the following questions:

How did you know where to start?

What prior knowledge did you recall to solve the problem?

How do you know that your solution works?

✎ Put your answer here.

### RUBRIC

# 5 out of 5 for a detailed answer that covers prompts/outputs and how they informed approach AND how they know solution works

# 5 out of 5 for answering the 3 questions in detail if they did not use resources

# 2 out of 5 if they are missing any one item

# 0 out of 5 if they are missing more than one item

Congratulations, you’re done!#

Submit this assignment by uploading it to the course Desire2Learn web page. Go to the “Homework Assignments” section, find the submission folder link for Homework #3, and upload it there.

© 2024 Copyright the Department of Computational Mathematics, Science and Engineering.